Yesterday in class I discussed how we can interpret the linear regression parameters, i.e., the y-intercept a (“alpha”) and the slope b (“beta”), which together give the linear regression line (also called a “linear model”):

y = a + bx

See below for a summary (you can also take a look at the Khan Academy videos “Interpreting y-intercept in regression” and “Interpreting slope in regression”):

- Recall that the linear model is used to predict an “output” value y for a given “input” value x.

- In terms of the line, the y-intercept a is the y-value where the line intersects the y-axis, i.e., the value of y when x = 0. Thus, in terms of the linear regression model, the y-intercept a is the predicted value of the dependent variable y when the independent variable x is 0.

- In terms of the line, the slope b is how much y increases or decreases if x is increased by 1. Thus, in terms of the linear regression model, the slope b is the predicted change in the dependent variable y if the independent variable x is increased by 1.
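Both interpretations can be checked directly from the equation y = a + bx. In the short derivation below, ŷ(x) is shorthand for the predicted value at x; that notation is introduced here only for this check.

```latex
\begin{align*}
\hat{y}(0) &= a + b \cdot 0 = a \\
\hat{y}(x+1) - \hat{y}(x) &= \bigl[a + b(x+1)\bigr] - \bigl[a + bx\bigr] = b
\end{align*}
```

The second line does not depend on x: a 1-unit increase in x changes the prediction by b no matter where we start.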

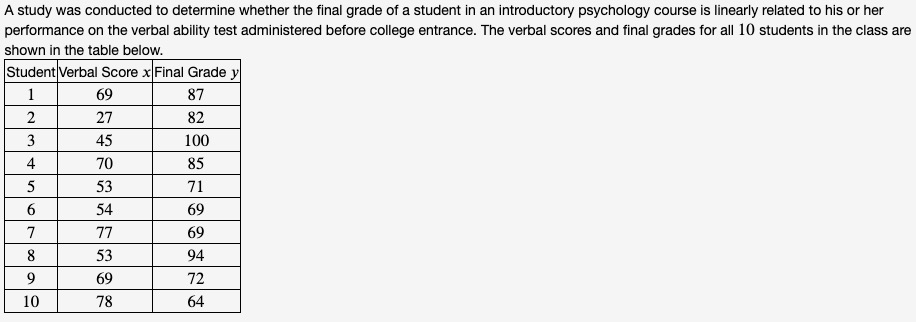

For example, this was an exercise on the “HW4-Paired Data” WebWork set:

The results of linear regression for this data set (i.e., regressing the dependent variable y (final grade) on the independent variable x (verbal score)) yield the following linear regression parameters:

- y-intercept a ≈ 99.1 ; this can be interpreted as the predicted final grade of a student who gets a verbal score of 0

- slope b ≈ -0.333 ; this can be interpreted as saying that a student whose verbal score is 1 point higher is predicted to have a final grade that is about 0.333 points lower
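
If you would like to check these two interpretations numerically, here is a minimal Python sketch. It uses the rounded values a ≈ 99.1 and b ≈ -0.333 from the exercise; the function name `predict` and the sample verbal score of 60 are made up purely for illustration and are not part of the WebWork problem.

```python
# Rounded regression parameters from the exercise above.
a = 99.1     # y-intercept
b = -0.333   # slope


def predict(x):
    """Predicted final grade for a verbal score x, using y = a + b*x."""
    return a + b * x


# Intercept: the prediction at x = 0 is just a.
print("Predicted grade at verbal score 0:", predict(0))

# Slope: raising x by 1 changes the prediction by b.
x0 = 60  # hypothetical verbal score, chosen only for illustration
change = predict(x0 + 1) - predict(x0)
print("Change in predicted grade for +1 verbal score:", round(change, 3))
```

Trying other values for `x0` gives the same change, since the slope does not depend on the starting verbal score.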