Title: Tunewave

Keywords: Electroencephalography (EEG), Music Composition, Neurofeedback, Music Software, Music Memory, Electroencephalophone

Abstract

The Tunewave is a device capable of reading one’s brain waves as a means of interpreting the music being “played” in one’s head and converting it to a more permanent form which can be played back at any time in the future. Furthermore, it would be able to project the music in real time, allowing for live “performances,” as well as for musicians to collaborate improvisationally both online and in person.

In order to make the Tunewave a reality, an enormous amount of research must be conducted across a wide variety of fields. We must first understand how the brain works when listening to, interpreting and performing music, as well as when one is engaging in creative activities. This would require a great deal of experimentation with EEG devices such as the Emotiv, which record and interpret brain activity. Electroencephalography itself would need to be researched extensively, including the science behind its function as well as its practical applications. In terms of those practical applications, research would also need to be conducted into how brain-monitoring technology can translate brain activity into commands, and into a rendering of what one is “hearing” in their mind’s ear.

Research: Tangible

Electroencephalography, at least in its realized form, dates back to 1924, when the German psychiatrist Hans Berger produced the world’s first recorded human EEG. The brain is a network of neurons, individual cells that communicate with one another electrically via ionic currents. These electrical impulses are detectable on the scalp via EEG. Because the impulse passing between one neuron and another is far too faint to pick up, EEG devices instead register the collective electrical activity of thousands of neurons firing together within their own networks, and it is this summed activity that makes up the recorded trace.

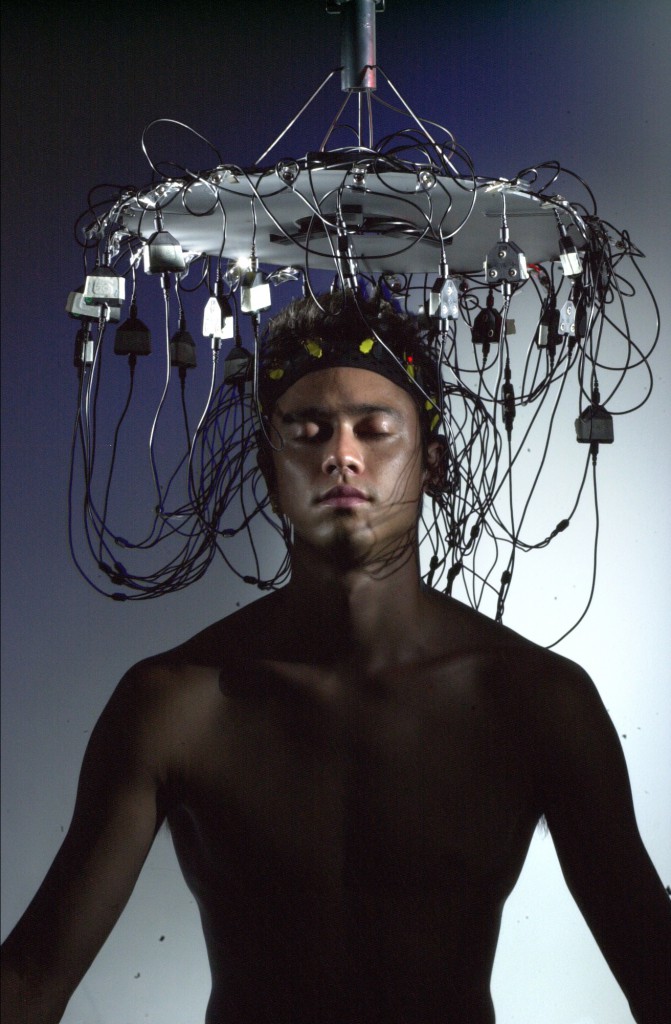

As research and information technology have progressed over the years, EEG devices have become far more complex. They work via upwards of 14 sensors positioned across the user’s scalp, each collecting data on electrical activity in a different part of the brain. So far the technology has found its use primarily in medicine and research. EEG devices are most frequently used to monitor and differentiate between types of seizures, to detect neural activity in patients with brain damage, and to monitor the effects of anesthesia.

Recent years have brought further-reaching applications of EEG technology. As many people know, the language a computer “speaks” at its most basic level is binary code. By comparison, the language our brains speak lies in the electrical communication between billions upon billions of neurons and neural networks. Linking these two ideas gives us the brain-computer interface, or BCI.

Brain-computer interfaces may very well be the next technological frontier. Already we’re seeing iterations of the technology that only a few decades ago might have been seen as science fiction. In recent years, researchers have developed brain-computer interfaces which allow human and animal brains to operate a cursor on a screen, or even a robotic arm. The implications of these developments are massive, to say the least. To reiterate, brain-computer interfaces take information produced by the brain, interpret that information and then use it to accomplish tasks. While creating movement is one application, researchers are now also able to create visual representations of neural activity. In one experiment, cats were shown a series of short videos while electrodes recorded the electrical activity of the thalamus; a computer then interpreted these recordings to produce videos of what the brain had seen (picture below).

Clearly, sophisticated applications of EEG have begun to emerge. However, aural representations of electrical activity have yet to be produced with such clarity. The furthest such applications have progressed is in devices called electroencephalophones (one prototype pictured below), upon which I will elaborate further in the following section.

Research: Philosophical

While the concept of the electroencephalophone has been around since the 1940s, the technology and the devices bearing its name have yet to develop anywhere close to their full potential. In fact, it appears no device has come near the level of complexity I am suggesting. In her New York Times article “The Cyborg in Us All” (2011), Pagan Kennedy discusses current BCI research, among other things. She spends time with computer scientist Gerwin Schalk, who uses platinum electrode brain implants, called electrocorticographic (ECoG) implants, to read and interpret brain activity. Whereas most EEG devices use only the electrical information available on the scalp, devices implanted directly on the brain’s surface receive far richer data.

In the article, Schalk plays Pink Floyd’s “Another Brick in the Wall, Part 1” for a number of subjects outfitted with ECoG implants. During moments of silence within the song, one would expect the brain activity related to interpreting the music to go quiet. Surprisingly, this was not the case. In fact, the neural activity suggested that the subjects’ brains were creating a model of where they thought the music would go. Schalk goes on to admit that this could mean we are not far off from creating actual music out of brain waves. That aside, Schalk is concerned mostly with using neural data from these electrodes to produce words, allowing those who would otherwise be unable to speak to communicate via recorded neural impulses. The US Army in fact gave Schalk a $6.3 million grant to fund his research, in hopes that he would produce a device allowing soldiers to communicate via their brains.

This research shows that the goals I am outlining here are not unattainable. While I have yet to come across any project seeking to create the device I am proposing, a number of past and ongoing projects point in a similar direction. In 1971, Finnish designer, philosopher and artist Erkki Kurenniemi designed a synthesizer dubbed the DIMI-T. The device used an EEG to monitor the alpha channel of a sleeping subject’s brainwaves as a means of controlling the pitch of the synthesizer. A more modern initiative called the Brainwave Music Lab seeks “to find interactive applications of brainwave music through various trial-and-error experiments.” While interesting and certainly relevant, the project by its own admission takes a quasi-scientific approach, which isn’t in line with the approach we must take in developing the Tunewave.
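To make the DIMI-T idea of steering pitch from the alpha channel more concrete, here is a minimal sketch of one way such a mapping could work. It is not Kurenniemi’s actual circuit. The 128 Hz sampling rate, the 8-12 Hz alpha band limits, the 1-40 Hz comparison range, the 220-880 Hz pitch range, and every function name below are my own assumptions for illustration.

```python
import cmath
import math

SAMPLE_RATE_HZ = 128  # assumed sampling rate; not taken from any specific device


def sine_wave(freq_hz, seconds=1.0, sample_rate=SAMPLE_RATE_HZ):
    """Generate a test tone standing in for one channel of EEG samples."""
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate) for t in range(n)]


def alpha_ratio(samples, sample_rate=SAMPLE_RATE_HZ):
    """Share of 1-40 Hz spectral power that falls in the assumed 8-12 Hz alpha band."""
    n = len(samples)
    alpha = total = 0.0
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        if not 1.0 <= freq <= 40.0:
            continue
        # Naive discrete Fourier transform coefficient for frequency bin k.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples))
        power = abs(coeff) ** 2
        total += power
        if 8.0 <= freq <= 12.0:
            alpha += power
    return alpha / total if total else 0.0


def ratio_to_pitch_hz(ratio, low_hz=220.0, high_hz=880.0):
    """Map a 0..1 ratio onto a two-octave pitch range on a logarithmic scale."""
    ratio = min(max(ratio, 0.0), 1.0)
    return low_hz * (high_hz / low_hz) ** ratio
```

Calling `ratio_to_pitch_hz(alpha_ratio(window))` on each successive window of samples would yield a pitch that rises as alpha activity comes to dominate the signal. A real device would also need filtering and artifact rejection, which this sketch leaves out.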

Full Project Description

The Tunewave will be an extremely multi-faceted project requiring an enormous amount of research and trial-and-error. In order to create a clearer picture of what its development would entail, I’ll break the description down into sections, each describing a different component of the project. Each component is essentially an element of an interaction diagram of the device and its function: the brain (which requires no additional description within the scope of the project), the EEG device, the computer, and the software. I will elaborate on each element below.

The EEG Device: It goes without saying that in order to utilize electroencephalography, a device capable of reading the electrical impulses of brain activity must be involved. As previously mentioned, however, current technology doesn’t allow for detailed enough readings without implanting electrodes in the brain, and even then, no device has yet achieved the level of detail required for this project. As such, the first stage of the project would be to develop EEG technology sensitive enough to capture this level of detail via the scalp alone, without being too complex or unwieldy for the average person to use without significant or professional assistance. Emotiv Systems, the company behind the Emotiv EEG device pictured below, is certainly on the right track.

Alternatively, if this device were created in a world where implants were the norm (implantation is already being developed as a simple outpatient procedure) and were used for a variety of other applications, then this component of the device would be unnecessary. Unfortunately, that isn’t (yet) the world we live in.

The Computer: The EEG device would be connected, via wires or wirelessly, to a computer that must then interpret the data received. While this could just as easily be accomplished via one’s personal computer, the user should not have to rely on their laptop or desktop PC. Instead, as the computer’s job would only be to run one piece of software, it would be a small device capable of being kept on one’s person. In the case that the computer is connected to the EEG device via a wired connection, this would also eliminate the hazard of a long wire which could be tripped over accidentally, disrupting the performance. Needless to say, in order for the software to interpret such an enormous amount of information in real time, the computer would need to be extremely powerful, at least by today’s standards.

The Software: Perhaps the most complex element of the Tunewave would be the software responsible for interpreting the data received and producing an acceptable (read: amazing) result. This software would itself consist of several components. The first would need to achieve a certain familiarity with the unique workings of the individual’s brain. This would put the data received in context, making it easier to derive meaning. The next component would then interpret the data through a series of complex algorithms. Finally, the product of that interpretation would be projected via speakers and/or stored digitally for later playback.
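The three software stages described above (familiarization with the individual, interpretation, and output or storage) can be sketched as a small pipeline. This is only a toy illustration under heavy assumptions: a single numeric “feature” stands in for the real brain data, the interpreter is a placeholder that maps that feature to a MIDI note number, and all class and function names are hypothetical.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Stage 1: a per-user baseline learned during a calibration session."""
    mean: float
    stdev: float

    @classmethod
    def calibrate(cls, resting_features):
        # Learn what "normal" looks like for this individual.
        return cls(statistics.fmean(resting_features),
                   statistics.stdev(resting_features))

    def normalize(self, feature):
        # Express a new reading relative to the user's own baseline.
        return (feature - self.mean) / self.stdev if self.stdev else 0.0


def interpret(z_score, center_note=60, semitones_per_unit=4, low=36, high=84):
    """Stage 2 (placeholder): map a normalized feature to a MIDI note number."""
    note = round(center_note + semitones_per_unit * z_score)
    return max(low, min(high, note))


@dataclass
class Recording:
    """Stage 3: store (time in seconds, note) events for later playback."""
    events: list = field(default_factory=list)

    def add(self, time_s, note):
        self.events.append((time_s, note))


def run_pipeline(profile, feature_stream, step_s=0.5):
    """Run every incoming feature through normalize -> interpret -> store."""
    recording = Recording()
    for i, feature in enumerate(feature_stream):
        recording.add(i * step_s, interpret(profile.normalize(feature)))
    return recording
```

A session would start with `UserProfile.calibrate(...)` on readings taken at rest, then pass live readings to `run_pipeline`. The stored events could be sent to a synthesizer for live projection or saved for later playback. The “series of complex algorithms” in the real device would replace the placeholder `interpret` function.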

Project Timeline

Assuming the timeline begins upon the return from spring break on April 10th, this will give me four weeks until the final presentation on May 8th. As such, I will allocate the tasks to these weeks. I will spend the first week researching how the brain works, and more specifically, what current science tells us about how the brain interprets music. The second week will involve extensive research on the inner workings of electroencephalography: how it works, how current devices are designed and used, and what research is currently being done. The third week will be spent examining the research conducted in the first two weeks, and integrating the concepts and information to lay out a detailed description of what is required in order to realize my idea. The fourth week will be spent compiling my findings into a cohesive presentation, complete with pictures and videos. The two weeks thereafter will be spent preparing my deliverables.

Description of Deliverables

The first deliverable will be the journal. In addition, I plan to produce a series of detailed drawings of what the device will look like in its final form. I will also be creating a number of interaction diagrams. The first will depict how the different components of the device relate to and communicate with one another. The others will depict how a user will interact with the device and its various components in the context of a number of hypothetical scenarios. Another deliverable I plan to produce is an image (possibly interactive if I have enough time) of the regions of the brain, their functions, and the regions that will be monitored by the device, with corresponding explanations. The final deliverable will be a user guide, divided into sections for the Tunewave’s various applications: improvised music, performed music, and recording music to be played back later.

http://en.wikipedia.org/wiki/Eduardo_Reck_Miranda (work on music from fMRI)

http://www.ni.com/white-paper/6130/en (control of wheelchair using EMG to capture subvocalizations)