The installation retained the vision I had for it, even though I had to cut some features because of time and space constraints. It managed to showcase the marriage of gestures and audio synthesis to create an entirely new experience. It taught me time management: setting a deadline for each required task instead of fitting it into an already crowded schedule. It also taught me that ideation should never get in the way of actual implementation, and that you have to decide when enough is enough. I also learned how to use the Leap Motion in Max, which was a first for me, and how to work with jit.mo.

Building Process

After deciding what to implement in the installation, I had to program each part of it, which I broke down into:

Audio Engine

This was the audio part of the installation. I had to map all the finger data coming from the Leap Motion into Max and assign each fingertip ID to its own buffer and groove. The more fingers I added, the more complex it got, because fingers clashed with each other when they shared the same interval. I therefore set a different position on the Y-axis for each finger, so that they would not collide and each would output data relevant to its respective position. I selected a group of samples consisting of claps, kicks, hi-hats and snares, loaded them into the buffers associated with the fingers, and used the finger data to trigger them. Each buffer held its own collection of samples, so there was a claps buffer, a hi-hat buffer, a kicks buffer, etc. This made them more manageable and allowed for easy modifications, since everything was modularized.
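The routing described above lives in a Max patch, so it has no text form of its own. The following Python sketch only illustrates the idea of giving each finger its own sample buffer and its own Y threshold. The finger names, buffer names, and threshold values (assumed to be in millimetres above the device) are hypothetical and not taken from the patch.

```python
# Hypothetical routing table: finger name -> (sample buffer, Y threshold).
# Every name and number here is illustrative, not read from the actual patch.
FINGER_ROUTES = {
    "thumb": ("kicks", 120.0),
    "index": ("snares", 140.0),
    "middle": ("hihats", 160.0),
    "ring": ("claps", 180.0),
}


def buffers_to_trigger(tip_heights):
    """Return the buffers whose finger is at or below its own threshold.

    tip_heights maps a finger name to its current fingertip Y position.
    Fingers without a route are ignored.
    """
    hits = []
    for finger, y in tip_heights.items():
        route = FINGER_ROUTES.get(finger)
        if route is None:
            continue
        buffer_name, threshold = route
        # Each finger is compared only against its own threshold,
        # so two fingers at the same height do not interfere.
        if y <= threshold:
            hits.append(buffer_name)
    return hits
```

Because every finger carries its own threshold, adding a finger means adding one entry to the table rather than rewiring the others, which mirrors the modular one-buffer-per-sample-type layout.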

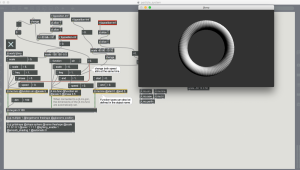

Particle System

The particle system was created with the help of jit.mo, a Max library that lets you create primitive shapes and alter their scale, frequency, speed and even shape. I used the tip position data from the Leap Motion to route the finger movements to these parameters. The particle system was thus intertwined with the audio engine, because they both needed each other to function. Moving up along the Y axis above the Leap Motion made the shape scale up, while moving down towards the device made it shrink. Moving left or right along the X axis decreased or increased its speed respectively, and also scaled the shape toward the left or right.
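As a rough illustration of this mapping, the sketch below converts a fingertip position into a scale and a speed using a clamped linear mapping. It is written in Python rather than Max, and the input ranges and output ranges are assumptions chosen for the example, not values from the installation.

```python
def scale_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    value = max(in_lo, min(in_hi, value))
    t = (value - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)


def particle_params(tip_x, tip_y):
    """Derive particle parameters from one fingertip position.

    Assumed ranges (hypothetical): X spans -200..200 and Y spans 50..450
    over the device; scale spans 0.2..2.0 and speed spans 0.1..1.0.
    """
    # Higher hand -> larger shape, lower hand -> smaller shape.
    scale = scale_range(tip_y, 50.0, 450.0, 0.2, 2.0)
    # Hand to the left -> slower, hand to the right -> faster.
    speed = scale_range(tip_x, -200.0, 200.0, 0.1, 1.0)
    return {"scale": scale, "speed": speed}
```

Clamping keeps the shape within a sensible size and speed when the hand drifts outside the assumed tracking range.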

Ideation

I had to break down the installation into its three components before I started building it.

These were:

Sound

First was deciding which samples I wanted to incorporate in the installation. Were they going to be small audio samples, or larger ones triggered over a course of time? Was the user going to generate their own melodies, or would a preset be determined? This was tricky and took some back and forth, but I finally opted for small audio samples that users would trigger by moving their fingers. Each individual sample would be mapped to a different finger, and the hand would act as a drum machine, with users cueing a clap, hi-hat, kick, etc. with their fingers and triggering an event when they moved the associated finger down far enough towards the Leap Motion.

Video

Second was deciding on the visuals that would accompany the audio. I had to decide whether the visuals would be mapped to the same system that controls the audio, or whether to create an entirely different setup for the visuals so that the two were completely independent. I opted for the same cues that trigger the audio samples to also change the visuals. Then I created a particle system to act as the visuals for the installation. I decided to use jit.mo, a Max library, to create a circle and other shapes that the user could select, and whose speed, frequency and scale they could manipulate depending on their finger location.

Gestures

Lastly, I had to decide which gestures I would read from the Leap Motion and assign in Max, and how they would go together with the audio and visuals of the installation. I opted for simple gestures. For example, a change in the Y position of a fingertip triggers an event for that specific finger if it passes the threshold, as opposed to requiring the motion of several fingers to trigger a single cue.
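One way to picture the threshold gesture is as a small detector that fires once each time a fingertip crosses downward past its threshold and stays silent while the finger remains below it. This is a Python sketch of that idea under stated assumptions, not the logic of the actual Max patch. The class name and the threshold value are hypothetical.

```python
class ThresholdTrigger:
    """Fire once per downward crossing of a Y threshold (illustrative only)."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.below = False  # whether the finger was below on the last update

    def update(self, y):
        """Feed the latest fingertip Y; return True only on a new crossing."""
        now_below = y <= self.threshold
        fired = now_below and not self.below
        self.below = now_below
        return fired
```

A finger has to rise back above the threshold before it can fire again, so holding a finger down would cue its sample once rather than on every frame.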

Welcome to Audio Odyssey!

Audio Odyssey is an interactive art installation that hopes to marry audio synthesis with gestural motion, aided by visuals that give feedback on the user’s actions. The motion of the user’s fingers triggers the audio samples that are mapped to them. The fingers also control the particle system that serves as the visual representation of the audio.