Final project for MTEC2280 Ins and Outs class in Spring 2022.

Interactive | Sound Reactive | Visual Design | System Design | Processing | Arduino | Electronics

Brief Description:

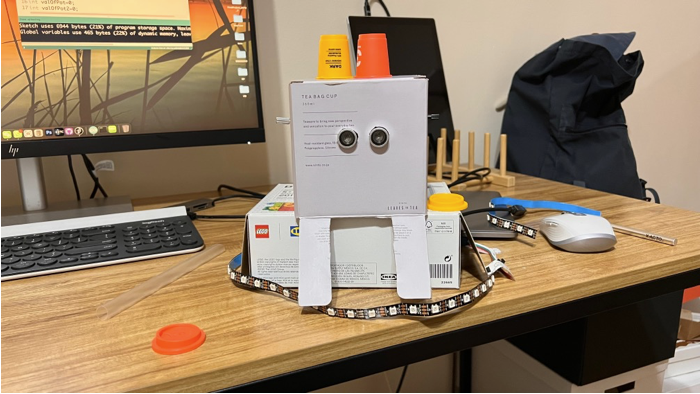

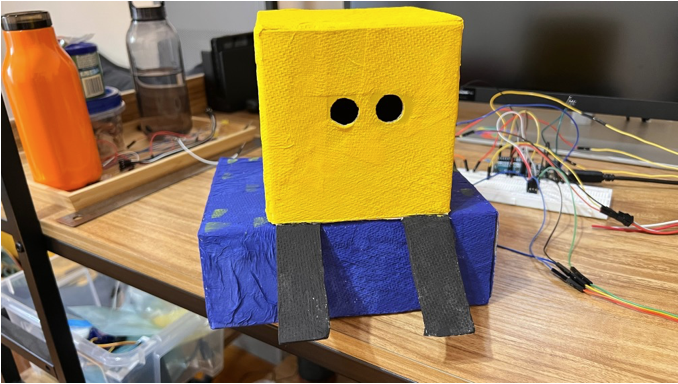

1- Project Overview: This project builds an interactive music visualization system embedded in a physical object that doubles as a desk toy. The system detects when a person is nearby and responds to user input through several sensors and controls. Its central concept is synchronizing visual patterns with the amplitude of a music track. The primary goal is to make the experience engaging and captivating for the user.

2- Physical Object:

- The desk toy is the primary physical interface.

- Equipped with:

•Ultrasonic distance sensor: Detects user presence.

•LED strips: Display dynamic visual patterns and colors.

•Two potentiometers: Enable user control and parameter adjustment.

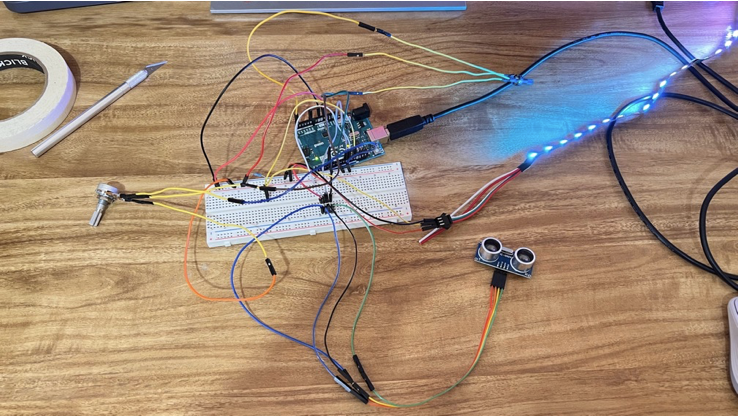
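As a rough illustration of how an ultrasonic distance sensor can be used to detect user presence, here is a minimal C++ sketch. It assumes an HC-SR04-style sensor whose echo pulse width is measured in microseconds (for example with Arduino's `pulseIn()`); the sensor model, the function names, and the threshold value are assumptions for illustration and are not taken from the project code.

```cpp
#include <cstdint>

// Speed of sound in air at about 20 degrees Celsius, in centimetres per microsecond.
constexpr float kSoundCmPerUs = 0.0343f;

// Convert a round-trip echo time (microseconds) into a one-way distance (cm).
// The pulse travels to the object and back, so the result is halved.
float echoToCentimeters(uint32_t echoMicros) {
    return echoMicros * kSoundCmPerUs / 2.0f;
}

// Hypothetical presence rule: a valid echo that is closer than thresholdCm.
// An echo time of 0 is treated as "no reading" (pulseIn() returns 0 on timeout).
bool userIsPresent(uint32_t echoMicros, float thresholdCm) {
    if (echoMicros == 0) {
        return false;
    }
    return echoToCentimeters(echoMicros) <= thresholdCm;
}
```

In an Arduino sketch, `echoMicros` would typically come from `pulseIn(echoPin, HIGH)` after sending a short trigger pulse; the pin wiring depends on the actual build.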

3- Arduino Microcontroller:

- Facilitates communication between the physical object and the software components (in Processing).

- Reads data from the ultrasonic distance sensor and potentiometers.

- Exchanges data with the Processing environment over the serial port.

- Uses the amplitude data from Processing to control the RGB color of LEDs.
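One possible way to turn an incoming amplitude value into an LED color is sketched below in C++. It assumes Processing sends the amplitude as a single byte (0 to 255) over serial; the `Rgb` struct, the `amplitudeToColor` name, and the blue-to-red color ramp are hypothetical choices for illustration, not the project's actual mapping.

```cpp
#include <cstdint>

// Simple 8-bit-per-channel color, as used by most LED strip libraries.
struct Rgb {
    uint8_t r;
    uint8_t g;
    uint8_t b;
};

// Hypothetical color ramp: quiet passages are blue, loud passages are red,
// and green peaks in the middle of the range.
// level is the amplitude byte received from Processing (assumed 0..255).
Rgb amplitudeToColor(uint8_t level) {
    Rgb color;
    color.r = level;
    color.b = static_cast<uint8_t>(255 - level);
    // Green rises from 0 to 254 over the lower half, then falls back to 0.
    color.g = static_cast<uint8_t>(level < 128 ? level * 2 : (255 - level) * 2);
    return color;
}
```

On the Arduino, the resulting channels would then be written to the LEDs, either with `analogWrite()` for a plain RGB strip or through an LED library for an addressable strip; which applies depends on the strip used in the build.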

4- Processing:

- User Interaction:

•Utilizes mouse input for user interactions, including selection and triggering of actions.

•Potentiometer values are incorporated to allow real-time parameter adjustments.

- Music Visualization:

•Uses the Amplitude analyzer from the Processing Sound library to measure the amplitude of the music track.

•Uses the map() function and animation techniques to turn amplitude values into dynamic visual patterns.
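The mapping step can be illustrated with a small worked example. The sketch below is written in C++ to match the Arduino side; `remap` reproduces the linear arithmetic that Processing's built-in `map()` performs. The `amplitudeToDiameter` helper, the gain parameter (imagined as coming from a potentiometer), and the 20 to 400 pixel range are assumptions for illustration only.

```cpp
// Linearly rescale value from the range [inMin, inMax] to [outMin, outMax].
// Like Processing's map(), this does not clamp out-of-range input.
float remap(float value, float inMin, float inMax, float outMin, float outMax) {
    return outMin + (outMax - outMin) * ((value - inMin) / (inMax - inMin));
}

// Restrict a value to the range 0..1.
float clampUnit(float value) {
    if (value < 0.0f) return 0.0f;
    if (value > 1.0f) return 1.0f;
    return value;
}

// Hypothetical visual mapping: scale the amplitude (assumed 0..1) by a
// user-controlled gain, clamp it, and convert it to a circle diameter in pixels.
float amplitudeToDiameter(float amplitude, float gain) {
    float scaled = clampUnit(amplitude * gain);
    return remap(scaled, 0.0f, 1.0f, 20.0f, 400.0f);
}
```

A raw potentiometer reading could be converted to a gain in the same way, for example `remap(raw, 0, 1023, 0.0f, 2.0f)` on a board with a 10-bit ADC.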

Process:

1- Initial idea: The user interacts with the light, sound, and screen, and the system senses the user's distance and the brightness of the space. When the user gets close to the physical object, sound and light are triggered to invite interaction. For example, when the user enters the detection area and the space is bright, lively music plays. A user guide then appears on the screen to explain how to interact with the device; for instance, the pattern of the LEDs or the pattern on the screen changes based on the distance between the user and the distance sensor.

Initial sketch

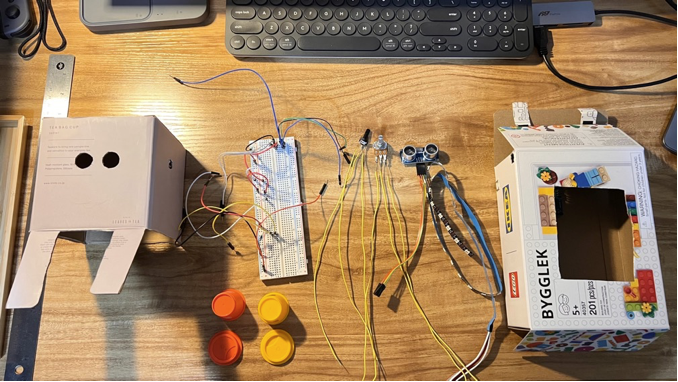

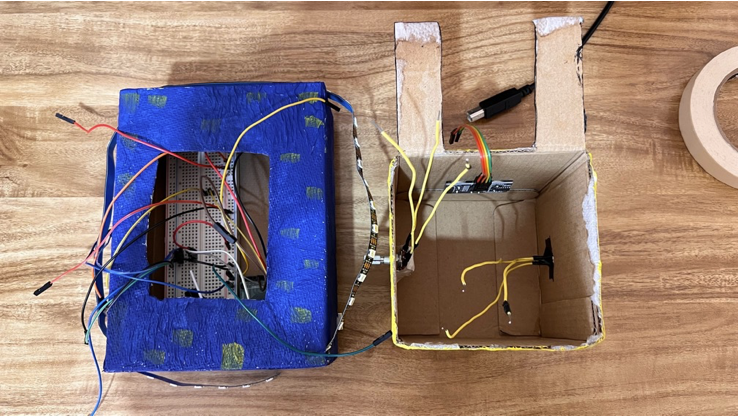

2- Prototype:

I revised the idea into another version of the project based on sensor testing. (I think the ultrasonic distance sensor isn’t an appropriate sensor for this kind of data transfer task.) After more research, I decided to use the amplitude analyzer from the Processing sound library to create a dynamic pattern. I also added two potentiometers and a mouse to the system, allowing the user to interact with the toy in a different way.

3- Fabrication:

Materials: Two boxes, a few mini instant coffee cups, paper towels, white latex glue, watercolor paint, a few jumper wires, breadboard, Arduino, LED strip, ultrasonic distance sensor, two potentiometers

Github link: https://github.com/yinglianliu/InsAndOuts_Yinglian/tree/main/FianlProject_Final